Organisational Evaluation of Beyond Blue

Beyond Blue is one of Australia’s best-known, most trusted and most visited mental health organisations.

Every three years, Beyond Blue engages an external evaluation team to conduct an independent evaluation of the organisation. In 2021, ARTD was engaged to design and deliver the fifth independent evaluation of Beyond Blue.

The evaluation covered Beyond Blue’s external-facing activities during its 2020–23 strategy period. This included all key products and services, Beyond Blue’s work with people with lived experience as speakers, volunteers and Blue Voices members and, to a lesser extent, Beyond Blue’s other activities, such as policy advocacy and research.

The challenge

The evaluation sought to answer 10 questions relating to Beyond Blue’s reach, outcomes, value for money, and role in the mental health ecosystem. This presented several challenges during the design stage.

- Defining the evaluand: Before you can evaluate the impact of something, you must first be able to define it. This is relatively straightforward when conducting program evaluations, but much more complicated when conducting an organisational evaluation.

- Synthesising diverse outcomes data: We knew we’d have access to outcomes data for most of Beyond Blue’s products and services. However, these data differed in nature (e.g. survey data, monitoring data, evaluation reports) and often covered different time periods. Plus, our evaluand was Beyond Blue itself, not its individual products and services.

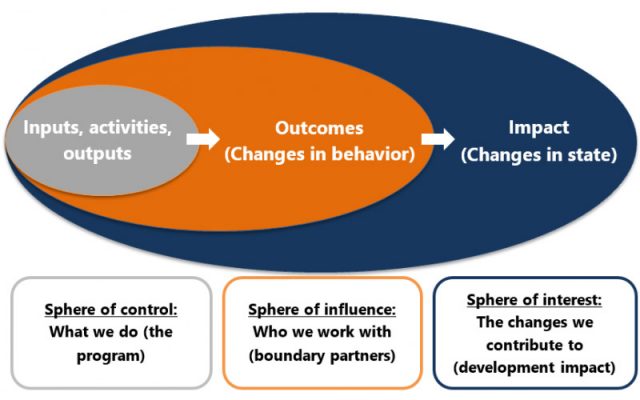

- Assessing impact in a complex system: Beyond Blue’s vision – that all people in Australia achieve their best possible mental health – is affected by many factors outside Beyond Blue’s activities. During the 2020–23 strategy period, extended COVID-19 lockdowns, extreme weather events and a considerable increase in the cost of living all had an unprecedented impact on mental health and wellbeing.

Our approach

To navigate these challenges, we used a theory-based approach that incorporated:

- a systems-informed logic model, capturing Beyond Blue’s key business roles to clearly set the scope

- a mixed-methods data synthesis process to transparently synthesise various data sources and report on the scale of outcomes supported and the quality of the evidence of these outcomes

- a contribution analysis to strengthen the assessment of Beyond Blue’s contribution to mental health outcomes in the context of other factors within the system.

Mixed-methods data synthesis

We used an adapted mixed-methods data synthesis (MMDS) process[1] to assess and combine a diverse set of evidence to evaluate Beyond Blue’s outcomes.

The established MMDS process has four steps:

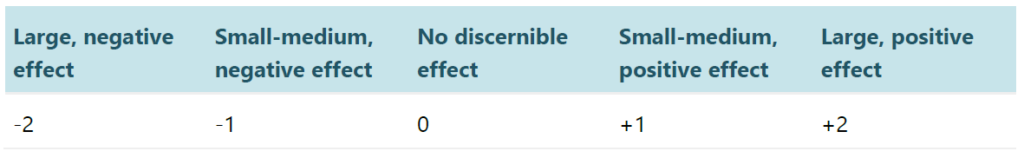

- Rate the size and direction of the program’s effect according to each data source.

- Rate the worth of each data source.

- Combine the size and direction and the worth for each data source.

- Aggregate these combined ratings to arrive at an overall effectiveness estimate.

As the process was designed for program rather than organisational evaluations, we used only the first two steps.
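To make the four-step logic concrete, it can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the -3 to +3 effect scale, the single overall worth score per source, and the multiply-then-weighted-average rule for Steps 3 and 4 are hypothetical choices, not the scales or formulas from McConney et al. or from this evaluation, and the source names and ratings are invented. Steps 3 and 4 collapse all sources into a single program-level estimate, which is the part this evaluation did not use.

```python
from dataclasses import dataclass


@dataclass
class SourceRating:
    """Ratings for one data source (hypothetical structure)."""
    name: str
    effect: int  # Step 1: assumed signed scale, -3 (large negative) to +3 (large positive)
    worth: int   # Step 2: assumed overall worth, 1 (low) to 3 (high)


def combine(rating: SourceRating) -> int:
    """Step 3 (assumed rule): weight a source's effect rating by its worth."""
    return rating.effect * rating.worth


def aggregate(ratings: list[SourceRating]) -> float:
    """Step 4 (assumed rule): worth-weighted mean effect across all sources."""
    total_worth = sum(r.worth for r in ratings)
    return sum(combine(r) for r in ratings) / total_worth


# Invented example data: two data sources for one program
ratings = [
    SourceRating("survey", effect=2, worth=3),
    SourceRating("monitoring", effect=1, worth=1),
]
print(aggregate(ratings))  # (2*3 + 1*1) / (3 + 1) = 1.75
```

Stopping after Step 2, as this evaluation did, keeps each source's effect and worth ratings side by side rather than reducing them to one number.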

To ensure the MMDS process was transparent and robust, we undertook these steps with a working group that included members of the Independent Evaluation Advisory Committee (including one Blue Voices member) and additional Blue Voices members recruited specifically for the working group. We did this through two workshops.

Step 1: Rate the size and direction of the program’s effect according to each data source

For each data source, reviewers assessed whether it measured the outcomes that were a focus of the evaluation and, if so, the direction and size of the effect.

During Workshop 2, an ARTD team member and an Independent Evaluation Advisory Committee or Blue Voices member debated each draft outcome size score, which was then adjusted to reflect the discussion. ARTD made the final decision on each score, consistent with our role as independent evaluators.

Step 2: Rate the worth of each data source for the purposes of the evaluation

We established the following criteria of worth for judging each data source for the purposes of the evaluation:

- credibility: how rigorous the data source is

- confirmability: how reliable the data source is

- from those impacted: whether the data source comes from people who access the program or service

- representative: whether the data source comes from a representative sample that includes priority populations

- relevant: whether the data source measured relevant outcomes over a time period aligned with the independent evaluation.

Each evaluation and monitoring data set was rated against the five criteria of worth using a rubric that clearly specified what each criterion looked like at every point on the scale. For each criterion, a data set received a score of 1 (low), 2 (medium) or 3 (high).
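As a sketch of how one data source’s worth ratings might be recorded and checked against the rubric’s shape (five criteria, each scored 1, 2 or 3), the Python below validates a set of scores and maps them to the rubric labels. The snake_case criterion keys, the function name `check_worth_scores` and the example scores are hypothetical; the rubric descriptors themselves are not reproduced here, and no combined worth score is computed because the evaluation describes none.

```python
# Criterion names follow the list above; the snake_case keys are an assumption.
CRITERIA = ("credibility", "confirmability", "from_those_impacted",
            "representative", "relevant")
LEVELS = {1: "low", 2: "medium", 3: "high"}


def check_worth_scores(scores: dict[str, int]) -> dict[str, str]:
    """Validate one data source's worth scores and return them as rubric labels."""
    missing = set(CRITERIA) - set(scores)
    unexpected = set(scores) - set(CRITERIA)
    if missing or unexpected:
        raise ValueError(f"missing: {sorted(missing)}, unexpected: {sorted(unexpected)}")
    for criterion, score in scores.items():
        if score not in LEVELS:
            raise ValueError(f"{criterion}: score must be 1, 2 or 3, got {score}")
    # Return labels in the same order as the criteria list
    return {c: LEVELS[scores[c]] for c in CRITERIA}


# Invented scores for a hypothetical survey data set
example = {"credibility": 3, "confirmability": 2, "from_those_impacted": 3,
           "representative": 1, "relevant": 3}
print(check_worth_scores(example))  # 'representative' maps to 'low'
```

Keeping the five labels separate, rather than summing them, mirrors the way the workshop discussed each criterion score individually.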

During Workshop 2, an ARTD team member and an Independent Evaluation Advisory Committee or Blue Voices member debated each draft criteria of worth score, which was then adjusted to reflect the discussion. ARTD made the final decision on each score, consistent with our role as independent evaluators.

Contribution analysis

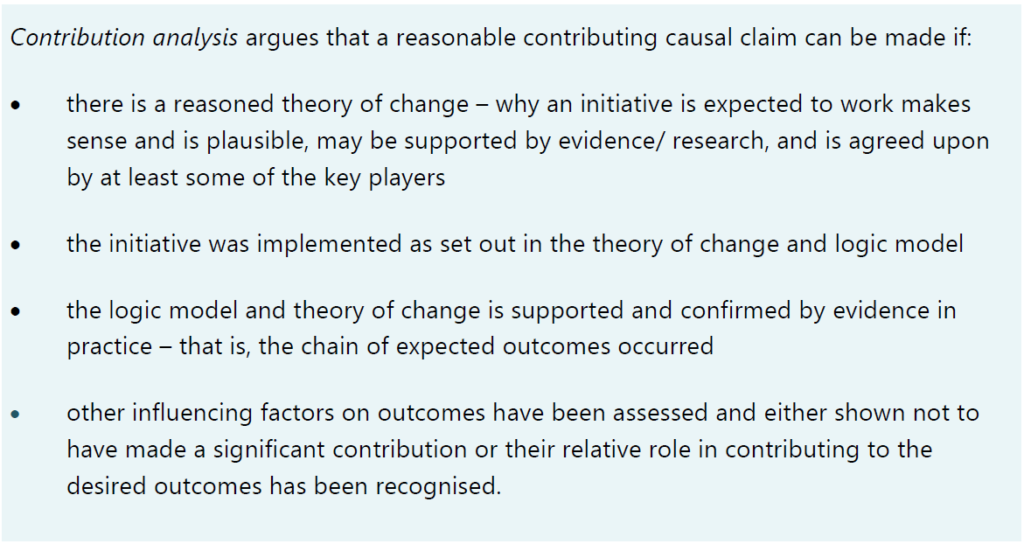

The other key aspect of our evaluation design was the use of contribution analysis.

Contribution analysis provides a formal approach to assessing the contribution an initiative is making to observed results by assessing the theory of change behind it and the other factors that may be influencing observed outcomes.[2] This was particularly important for Beyond Blue, given it aims to contribute to high-level outcomes for individuals, communities and systems that are influenced by a range of other organisations, government decisions, and external factors.

The ARTD evaluation team developed a modified version of a tool called the Relevant Explanation Finder[3] (REF) to systematically identify the key external factors that influence Beyond Blue’s intended outcomes, how they are likely to influence those outcomes and how their influence can be measured. ARTD drafted a long list of factors that could influence the outcomes Beyond Blue aimed to contribute to over the 2020–23 strategy period. This list was then narrowed down to the most relevant factors through a workshop with Beyond Blue staff.

The Independent Evaluation Advisory Committee reviewed how each factor was expected to influence outcomes and identified additional data sources for each factor. We later used the REF to help understand Beyond Blue’s contribution to higher-level outcomes in the context of external factors, and to understand Beyond Blue’s role in the ecosystem.
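The REF is essentially a structured register of external factors. A minimal Python sketch of that structure, assuming one record per factor holding its expected influence and the data sources for measuring it, might look like this. The class and function names are hypothetical, the factors are taken from the challenges described earlier, and the influence wording, shortlist and data source are invented for illustration; this is not the modified REF ARTD used.

```python
from dataclasses import dataclass, field


@dataclass
class ExternalFactor:
    """One row of a hypothetical REF-style register of external factors."""
    name: str
    expected_influence: str  # how the factor is likely to influence outcomes
    data_sources: list[str] = field(default_factory=list)  # how its influence could be measured
    shortlisted: bool = False  # kept after the staff workshop?


def shortlist(long_list: list[ExternalFactor], keep: set[str]) -> list[ExternalFactor]:
    """Flag the factors a workshop chose to keep and return them in original order."""
    kept = []
    for factor in long_list:
        factor.shortlisted = factor.name in keep
        if factor.shortlisted:
            kept.append(factor)
    return kept


long_list = [
    ExternalFactor("COVID-19 lockdowns", "likely to affect mental health and wellbeing"),
    ExternalFactor("extreme weather events", "likely to affect mental health and wellbeing"),
    ExternalFactor("cost of living increases", "likely to affect mental health and wellbeing"),
]
# Invented workshop decision and data source, for illustration only
kept = shortlist(long_list, {"COVID-19 lockdowns", "cost of living increases"})
kept[0].data_sources.append("hypothetical population wellbeing survey")
print([f.name for f in kept])  # ['COVID-19 lockdowns', 'cost of living increases']
```

Recording the data sources against each factor is what allows the register to be revisited at the analysis stage, when observed outcomes are weighed against these external influences.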

Impact

Our evaluation found that Beyond Blue plays an important role in the mental health ecosystem. Its biggest impact is in reducing stigma and encouraging help-seeking, and its advocacy is important for raising mental health awareness and normalising conversations about mental health and wellbeing. We also identified opportunities for Beyond Blue to strengthen its reach, impact and data. Beyond Blue accepted all 15 suggestions and committed to incorporating them into its workplans where they weren’t already included.

References:

[1] McConney, A., Rudd, A., & Ayers, R. (2002). Getting to the bottom line: A method for synthesizing findings within mixed-method program evaluations. American Journal of Evaluation, 23(2), 121–140.

[2] Mayne, J. (2012). Contribution analysis: Coming of age? Evaluation, 18(3), 270–280.

[3] Biggs, J., Farrell, L., Lawrence, G., & Johnson, J.K. (2014). A practical example of Contribution Analysis to a public health intervention. Evaluation, 20(2), 214–229. https://doi.org/10.1177/1356389014527527