Hubs, spokes and networks in government evaluation approaches

Kate and Ken recently attended an AES panel discussion on Whole of Government Evaluation Approaches, with representatives from NSW, VIC, NT, QLD and ACT.

It was interesting to hear the differences and similarities in the approaches taken by states and territories towards evaluating government programs and policies. While most were taking a Whole of Government approach to evaluation, Victoria doesn’t have a central agency guiding expectations of evaluations across government, and instead works in a more networked way. Eleanor Williams, Chair of the Victorian Public Sector Evaluation Network and Deputy Director, Research and Advisory ANZSOG, suggested that this less prescriptive approach can have the benefit of diversity, with different agencies able to do things in different ways. However, it does mean there is less consistency in how, and how regularly, evaluations are done, and less coordination to allow evaluators to benefit from other evaluations being undertaken or planned.

There was also discussion about the evidence for the effectiveness of a Whole of Government approach (with an audience full of evaluators and commissioners of evaluations, evidence for the model was bound to come up). Much of the current reasoning for Whole of Government frameworks has arisen from audits, fiscal measures and the 2019 review of the Australian Public Service, which looked at key considerations and evidence for different approaches to evaluation in government[1]. These included a hub and spoke approach, with a central agency in place to coordinate evaluation but at a step removed, so that departments can use their own local expertise in commissioning and conducting evaluations; a centralised evaluation function; and an entirely decentralised model.

Capability building was a strong theme across the jurisdictions, provided through both formal and informal learning approaches. Ways in which the state and territory governments represented on the panel have been able to learn about and help develop their evaluation knowledge and skills include establishing local/state communities of practice; sharing learnings and evaluation experiences; peer coaching; establishing an evidence and evaluation learning academy (ACT); using AES events and webinars; taking a hybrid approach to evaluation, conducting part of the evaluation in-house and bringing in and learning from external support; and publishing evaluation findings and reports. For example, the Northern Territory, which has a relatively new evaluation unit within its government, indicated how much they have been able to learn from NSW through its resources, formal and informal discussions, and the sharing of evaluation projects and experiences.

The panel identified the value of both building internal evaluation capabilities to conduct evaluations in-house and using external independent evaluators, and noted that a mix of the two can be useful. What is effective depends on the specific context of each evaluation, including the resources and finances available, the expertise of in-house staff, opportunities for capacity building and learning from any external consultant(s), and other factors. However, when outsourcing, it’s always necessary to have someone in-house to be responsible for driving the evaluation, communicating expectations and, if the external evaluator is not brought on at the very beginning of the project, ensuring monitoring data is collected. We always find that such collaborative working relationships with clients, where we share knowledge from our respective positions, bring additional value to evaluations.

The panel also noted the value of bringing evaluators (whether in-house or external) in early. In our experience, doing so enables evaluative thinking to inform design, ensures there is early data to inform decisions about in-flight changes or continuation, and sets the evaluation up for success.

It was great to hear about some of the recent developments emerging in government evaluation approaches, responsive to the local context. For example, the Northern Territory has instituted a master list of agencies’ programs as part of their budget planning. This allows them to have oversight of potential program and policy linkages, as well as opportunities to run concurrent or collaborative evaluations, which can help to ensure that particular groups of people are not approached for the same or similar data repeatedly (a great way to avoid evaluation fatigue, we think!). It can also lead to evaluations being better planned and timed to capture feedback and insights from different programs and stakeholders contributing to the delivery of a particular policy.

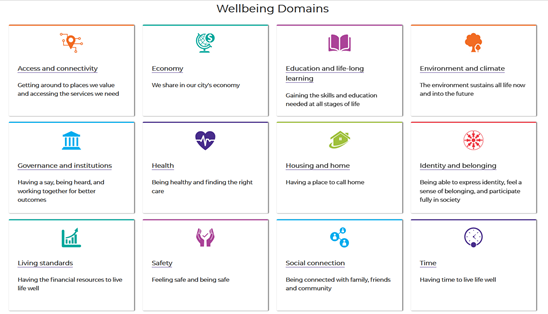

The ACT have embedded a Wellbeing Framework (below), developed in consultation with the Canberra community, within their evaluation framework. The Framework was published in 2020, with a publicly available dashboard of progress against the indicators released in 2021 (available here). We’ve previously written about the importance of democratising evaluation, and think this is an excellent example of collaboratively engaging citizens in policy and evaluation and then evaluating programs drawing on indicators that are clearly important to the local population.

The Wellbeing Framework and dashboard are also intended to help agencies connect the dots across programs and think about evaluation across agencies, and are the first step towards embedding a wellbeing lens across policy and budget processes.

It was clear from this panel that there’s been a lot of valuable sharing and cross-state as well as intra-state learning from evaluations. We hope there are more opportunities to learn from the states and territories as their approaches continue to develop and evolve (including through the AES Australian Public Sector Evaluation Network Special Interest Group).

References

[1] Independent Review of the APS. Appendix B: Evaluation in the Australian Public Service: current state of play, some issues and future directions. www.apsreview.gov.au/resources/evaluation-and-learning-failure-and-success