Fit for purpose evaluation #1 – the big questions

The concept of ‘fit-for-purpose’ evaluation has been around for a long time (I remember publishing guidance on it with Patricia Rogers back in 2015), but it is having a moment again. A few months ago, I was asked to present at a policy roundtable, ‘Advancing policy and program evaluation in Australian Government’, hosted by the Academy of the Social Sciences in Australia and the Australian Centre for Evaluation. This gave me the opportunity to present some ideas to the Hon Dr Andrew Leigh MP.

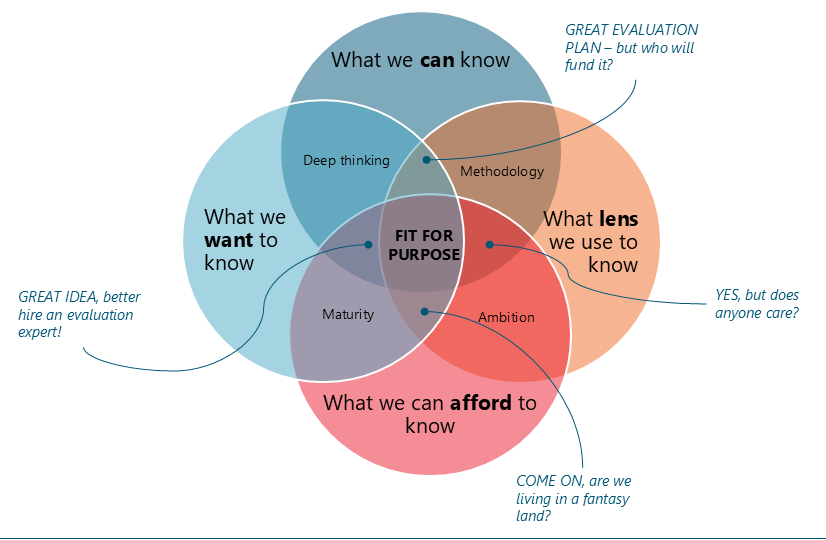

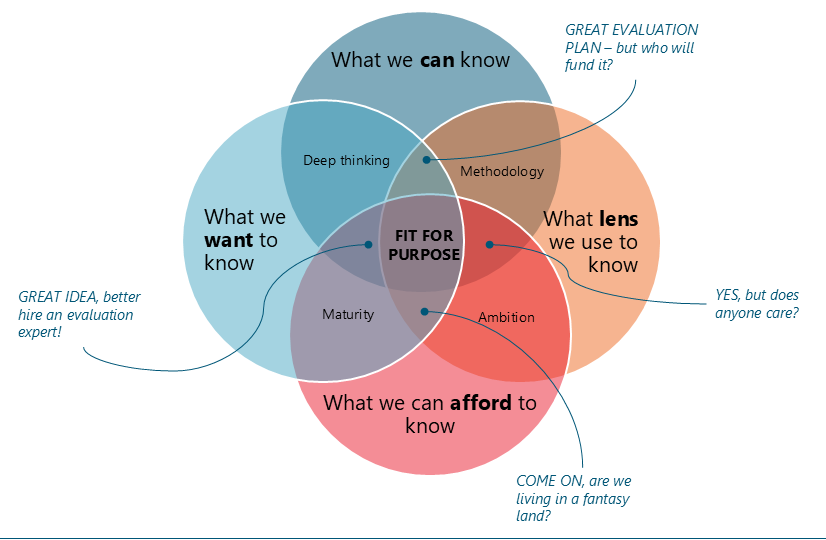

I was asked to present on the concept of ‘fit-for-purpose’ evaluation. I argued that it was the intersection of what you can know, what you want to know, and what you can afford to know. Elliot Stern and others have talked about this for a long time. Although I didn’t raise it at the roundtable, I want to add a fourth element: the ‘lens’ we use to know. I think this consideration deserves deeper academic interest, and it is important to consider the utility of the scientific paradigm we are using to do an evaluation. My view is that our paradigm should not be fixed by habit; good evaluators will aspire to adopt different paradigms, or lenses, that are more or less fit for purpose in different situations.

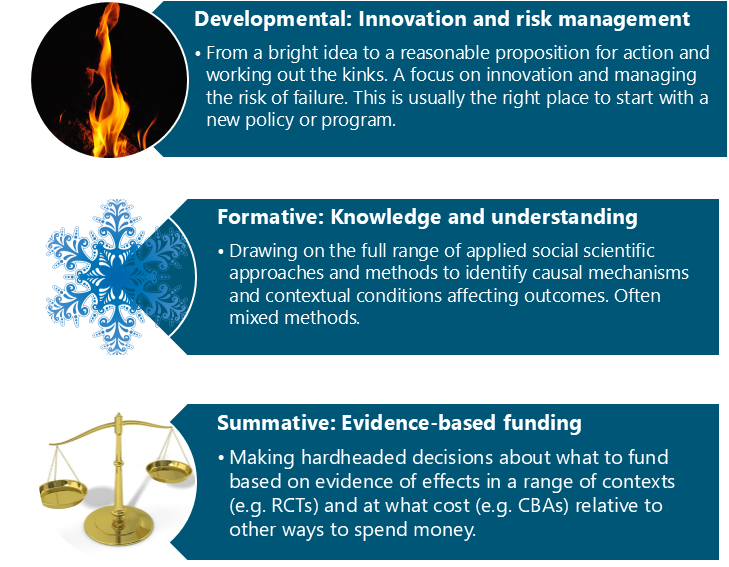

- This blog post is about the big questions to ask when considering a fit-for-purpose evaluation. The basic idea is that most policies or programs mature over time, and so should the approach to evaluation if it is to remain fit for purpose: from developmental, to formative, to summative.

- The next blog post will be about the importance of thinking about the lens you choose to view the evaluand.

- The third blog post will set out a structure and process for doing developmental evaluation. It will feature a Q&A and a scenario to help apply the decision-making process about what a fit-for-purpose evaluation looks like, along with considerations for developmental evaluation.

“Life can only be understood backwards; but it must be lived forwards.” Søren Kierkegaard

Reliable and actionable insights within the constraints.

Evaluation is difficult. It needs to serve a wide continuum of initiatives, from innovative, unformed and messy ideas to established best practice. It must always be fit for purpose, which makes it a deeply pragmatic field. The right evaluation approach isn’t always the most rigorous or comprehensive – it’s the one that gives you reliable and actionable insights within your constraints.

Evaluation and the public policy it serves are very complex – don’t beat yourself up too much about your choices, or about what is outside your control – but do be reasonable and think as deeply as you can.

“It is the mark of an educated mind to rest satisfied with the degree of precision which the nature of the subject admits and not to seek exactness where only an approximation is possible.” Aristotle

Fit-for-purpose evaluation must start with the purpose of the evaluation, and that means starting with what we can know about the evaluand as well as what we want to know about it. We must understand the nature of the intervention and its stage of development. Then we must consider the questions being asked and the resources available. The evaluation must generate useful and reliable information for decision-making, accountability and learning. We have to choose a lens through which to look at it – we can’t look at “everything all together all at once”. When we make choices and do evaluation, we must be mindful that an evaluation should not cost more than the value of the information obtained.

What are the intersections?

- What we can know depends on what ‘it’ is and its current level of development – perhaps it is fully formed, unformed but forming, or endlessly dynamic. We match this to a type of evaluation.

- What we want to know should be based on the decisions we need to make or the overall purpose and key evaluation questions, for example:

- Should we be doing this?

- How can we improve this?

- Does it work, for whom under what circumstances and to what extent?

- The lens we look through is our ‘paradigm’, and it shapes our ‘biases’ and what we see in an evaluation, for example:

- Realist

- Empirical – post-positivist

- Constructivist

- Pragmatist

- Systems

Also ask: What role am I playing? What are my values? What perspective do I hold? What is my bias?

- We can only afford to know as much as our resources allow:

- Money

- Time

- Skills

Evaluation should not cost more than the value of the information obtained.

In an ideal world, an evaluand will progress from developmental to formative and then summative evaluation, but things are rarely ideal!

- If the Minister says ‘I just want to know what works’, then identify the stage of development and proceed.

- If the Minister says ‘make this idea work’, then go developmental.

- If the Minister says ‘how can we improve this?’ then go formative.

- If the Minister says ‘I need evidence to keep funding this’, then go summative.

Read the second blog in this series here.