Don’t train and hope: Lessons from delivering and evaluating training programs

I’m a big believer in the potential of training programs. I’m part of a company that provides everyone with a personal training budget and company-wide training days, has lunchtime learning every Wednesday, and supports Australian Evaluation Society programs. Training is part of the lifelong journey that is evaluation.

But I get it when my colleague, Brad Astbury, says the biggest problem with training programs is the philosophy of ‘train and hope’. Indeed, in the early stages of my career I attended training that I found interesting enough, but I failed to find ways to apply it.

The Kirkpatrick model

Kirkpatrick is a foundational author on training evaluation – he first published in 1959 before releasing the first edition of Evaluating Training Programs in 1994. While details have been revised, the four levels of his model remain the same.

- Level 1: Reaction: To what extent do participants find the training engaging and relevant to their jobs?

- Level 2: Learning: To what extent do participants acquire the intended knowledge, skills, attitude, confidence and commitment through participation in the training?

- Level 3: Behaviour: To what extent do participants apply what they learned during training when they are back on the job?

- Level 4: Results: To what extent do targeted outcomes occur as a result of the training and of the support and accountability package?

At a glance, the levels make intuitive sense. And there must be something to them because the book is now in its third edition. However, there has also been a lot of criticism of Kirkpatrick’s model.

The gaps in Kirkpatrick

Bates (2004) sets out three key criticisms of Kirkpatrick’s model.

- The model is incomplete because it does not consider important delivery, individual and contextual influences, such as the learning culture of the organisation and the nature of support for skill acquisition and behaviour change.

- There is an (implied) assumption of causality between the levels.

- There is an assumption that each successive level provides information that is more important for understanding effectiveness than the level before it.

These are relevant concerns. One of the key things that stands out to me is that the link between reaction and learning is tenuous. Do you need to enjoy training to learn? Maybe not. Some lessons are best learned by getting uncomfortable. For example, last year at the Australian Evaluation Society Conference, I went to a two-day training on cultural safety and respect with Sharon Gollan & Kathleen Stacey – we had to feel uncomfortable to begin to confront institutional racism and unearned privilege. I also think I could learn from training that wasn’t particularly engaging if it was content that I was required to master for my role. That’s not to say that being engaging is not at all important, particularly when you’re in the business of fee-for-service training.

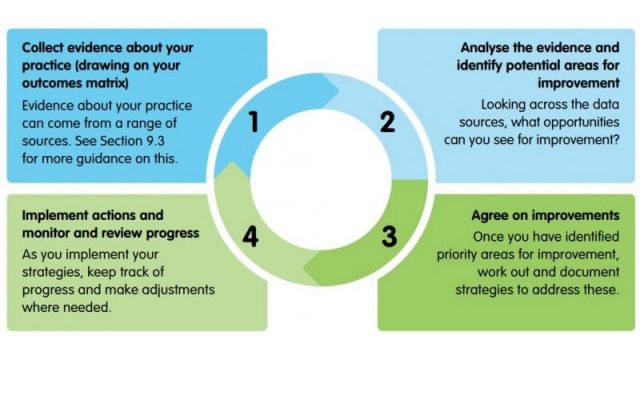

The leaps between learning, behaviour and results are also large. For this reason, Thomas Guskey has added another layer – organisational support – to Kirkpatrick’s model. This is important to making the leaps and avoiding the perils of the philosophy of train and hope.

To add to these concerns, I feel there is a level missing at the bottom of the model. Before you have reaction, you must have reach. This is why I find Funnell and Rogers’ (2011) information/education program logic archetype useful. It is important to understand reach because without it you wouldn’t have a training program, and because your means of achieving reach may also influence your learning. Is the training mandated or voluntary? Is it crucial to your daily work and life or more peripheral? This might influence how you react and learn, or affect the level of organisational support to help embed learnings in practice.

So how do we avoid the philosophy of train and hope?

At ARTD, we think carefully about the training programs we access – not only whether they come recommended, but whether it is the right time to access this particular training, and whether we will be able to come back and integrate it into our practice. We also have a commitment to share what we’ve learned – through this blog and internal learning sessions. This is useful not only because it supports a culture of learning and an environment of organisational support, but also because teaching others helps us master new knowledge.

When we deliver training, it is applied. We don’t just teach the concepts but give people the opportunity to practise them. Our preference is to provide follow-up mentoring to support the leap between learning and behaviour in daily practice. In a recent training session, I also channelled my behavioural insights colleagues, using a commitment device: I asked everyone to share aloud one thing they were going to change in their practice after this session. I’m keen to see how this one works!

References

Bates, R. (2004). ‘A critical analysis of evaluation practice: the Kirkpatrick model and the principle of beneficence’. Evaluation and Program Planning, vol. 27, pp. 341–347.

Kirkpatrick, D.L. (2006). Evaluating Training Programs.

Funnell, S.C. and Rogers, P.J. (2011). Purposeful Program Theory: Effective Use of Theories of Change and Logic Models.

The Evaluation Exchange. (2005–06). ‘A Conversation with Thomas R. Guskey’. Harvard Graduate School of Education.